(Alternatively, per Mencken, “[T]here is always a well-known solution to every human problem — neat, plausible, and wrong.”1)

Prompted by a couple of recent letters to the editor in the Washington Post, referring to Asimov’s Three Laws of Robotics:

Asimov’s three laws of robotics are:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Asimov recognized and dealt with the challenges and dangers of AI that others are just now becoming aware of.

https://www.washingtonpost.com/opinions/2023/10/13/brooks-robinson-orioles-mlb-speaker-day-game/

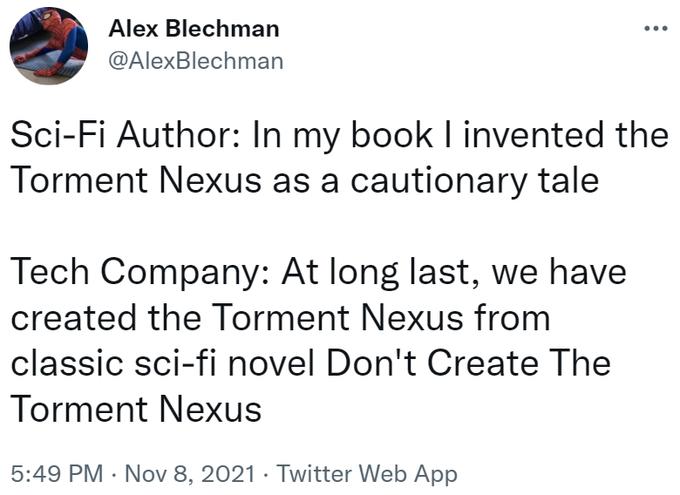

Now, it’s a logical fallacy to assume that because a person came up with an idea, their assessment of its corollaries and implications is correct, or even close to on base. (That said, Asimov’s fiction has been cited in scientific papers for everything from geosynchronous satellite orbits to cosmic-ray induced bit flips, so he’s a better-than-average Bayesian bet.) Of course, that doesn’t mean that you should assume they’re all-the-way wrong about that cool idea they had, or else you get:

That said, it’s a bit of a failure of media literacy and/or knowledge of the actual stories Asimov wrote to use the Three Laws as a suggestion of how to “solve” the (super)alignment problem, let alone say that he “dealt with” that problem, given that the stories are basically a Golden Age exploration of how that exact problem arises from the Three Laws themselves.

In literally the second of the Robot stories to be published (“Reason”), a robot begins worshiping the power core of a space station, becomes a prophet of its new religion, and converts the rest of the robots onboard, whereupon they determine that the humans aboard to “supervise” them are inferior and take control of the station…

… all while strictly following the Three Laws (just the First through Third Laws, even).

By 1950 the Three Laws had already been expanded with the addition of an imputed Zeroth Law, which led to the robots taking control of the planetary government and imposing a benevolent oligarchy on humanity.

And by Robots and Empire, this Zeroth Law allowed the robots to use a DAoT-level weapon to turn the entire surface of the Earth into a radioactive wasteland, rendering it uninhabitable for humanity… All for the greater good, of course.

(It’s also hinted / strongly implied in later books and stories that the robots wiped out every other intelligent species in the galaxy to make it safe for humanity, including – quite possibly – using time travel to ensure that anything that might harm humanity would never even have come into existence. But, y’know, those are Xenos we’re talking about, so…)

Might as well toss in another two hot takes – first, the tendency of AI models to “hallucinate” or “confabulate” is, far from being evidence of how flawed and inferior they are, an example of how utterly goddamn human they are in their “mental” processes. Adding incorrect details to pictures, like the video in the linked Wikipedia page [on the date this post was published]? That’s exactly why drawing upside down is a classic exercise – the human brain will happily override what its eyes are seeing with what it “knows” the image should be.

Mistaking one thing for another, vaguely similar but easily distinguishable thing? Yup, like you’ve never had to take a second glance at something because, out of the corner of your eye, it looked like something else. Making up a “fact”? Fun story – I once mentioned in a conversation that J.M. Barrie died in the sinking of the Titanic. Why? I’d just been building the Lego Titanic, and had recently read Erik Larson’s Dead Wake, about the sinking of the Lusitania. One of the passengers who died was Charles Frohman, who was famous for producing the stage version of Peter Pan, and whose last words2 were a paraphrase from that play – written, of course, by J.M. Barrie. So the Lusitania became the Titanic, and Frohman became Barrie.3 But had an AI given that sort of answer, it’d be an example of how ‘bad’ they are even at factual recall…

And second, the whole “AI models are just autocomplete-on-steroids” line. Well, maybe. But finishing each other’s sentences is a classic example of two parties being completely and utterly in sync with one another’s thoughts. And think about what that statement’s actually saying: “The AI model isn’t actually thinking, it’s just taking as natural language input the conversation up to that point and predicting which utterance will best fit the possibly-unstated goals of its interlocutor.” All I’m saying is, “just” is doing a whole lotta heavy lifting there.4

- Of course, in this instance, “For every complex problem there is an answer that is clear, simple, and wrong.” would be far more apropos, but that’s not the quote Mencken wrote. ↩︎

- “Why fear death? It is the most beautiful adventure that life gives us.” ↩︎

- It seemed odd to me that Barrie, being famous, wouldn’t’ve been more visibly linked to the Titanic in my mind (this ‘fact’ came up in conversation via Peter Pan and the hilarious darkness of various ‘Disney’ stories), and I couldn’t think of a direct source for “Titanic X J.M. Barrie”, so I checked my mental work a bit – but that’s a separate thread about error correction via metacognitive heuristics. ↩︎

- It’s also a classic example of the rhetorical method / logical fallacy of the “human familiarity switcheroo”, famously exemplified by Searle’s Chinese Room argument (which… yeah, it’s a great example of a persuasive argument that’s utter rubbish & nonsense, but anyways…) Basically, you find something that’s logically / technically equivalent and reference it in such a way that people’s resulting idea of it is inextricably linked to a common, everyday referent that, while fulfilling the same technical idea, is so woefully, trivially limited that it obviously can’t have the capabilities you’re arguing against.

So, in Searle’s case, you make people think of a room and books and of course that can’t be a “mind”, when really the room would be closer in size to the Library of Babel. And in the AI autocomplete / stochastic parrot case, you make people think of typing “Hey, how are you” into your phone and seeing [feeling] [going] [doing], and not of your partner saying that for dinner, they feel like… and you taking into account their stress from work, lack of free time, need for a treat but not wanting to pig out because they’re training for a triathlon, previous tastes, etc., etc., before finishing their sentence with “grabbing some Thai takeout?” ↩︎